Glad to see Bender cite Wittgenstein, because he settled this debate 100 years ago while fighting against the descriptivists, whose basic argument was that you can understand anything if you describe it in sufficient detail, and that our lack of understanding of any topic derives from our not having enough facts about the subject. (As an aside, I see a very strong analogy between the descriptivists and those who think AI is "alive.") Wittgenstein made a lot of good counterarguments, but the one that turned out to be most famous and most illustrative was where he said that the way we learn what the color red is is by someone else pointing to a red object and saying "this is red." He was trying to argue that meaning derives from agreement about meaning and nothing more. We use language, at least in part, to give others access to our inner thoughts, and without agreement on words' meanings, others completely lack access to our inner selves.

Where I think that the Mannings of the world completely lose me is in statements of this sort:

he allowed, humans do express emotions with their faces and communicate through things like head tilts, but the added information is “marginal.”

Um, what? What if I said in response, "Fuck you." It would probably be taken as being pretty hostile, right? But I can think of at least three other use cases that lack any hostility: say, gentle ribbing; an expression of disbelief; and an expression of desire. One could think of almost any phrase, but especially any idiomatic phrase, for which there are many, many meanings, and the only way to disambiguate the meaning is by the "marginal" information.

I think this point has been made explicit in the age of text-based communication. How easy is it to interpret an email or a statement on social media as crass or aggressive, when the author was trying to be lighthearted? There's a famous experiment in neuroscience where you apply a force to a transducer, and that exact force is then applied to another person sitting across from you by another transducer. Then the person to whom the force was applied is asked to replicate exactly what they felt, which is in turn felt by person 1, who does the same, and so on. It spirals. Every time. Because we're hard-wired to perceive things to be worse than they are when they're done to us, and we respond in kind. The marginal information is the only thing that keeps us from killing each other.

I literally can't even wrap my head around someone denying that non-verbal context around verbal information not only informs but in many cases defines the meaning of the verbal information. It's like not agreeing on the color red. What kind of world will we live in where we can't agree on the meaning of words, because the speaker (i.e., the robot) doesn't have an inner self to try to give access to?

Edit: Just to add, I've recently begun using ChatGPT. I like it. It does some wonderful things. It helped me to design an experiment by cutting my overall search time from probably 5-10 hours on PubMed and Google to like 15-20 minutes of chat. I'm not against GPT specifically or AI generally. I'm against calling it conscious or anything other than really powerful computer code. It's the moral equivalent of MS Word to me, which also boosts my productivity immensely relative to pen and paper.
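The spiral in the force-matching experiment described here is compounding: if each person ends up applying some fixed factor more force than they just received, the forces grow geometrically. A minimal sketch; the function name and the `overshoot` factor are hypothetical parameters for illustration, not values taken from the experiment.

```python
def escalate(initial_force, overshoot, rounds):
    """Forces applied in successive turns of a tit-for-tat force-matching game.

    `overshoot` is a hypothetical ratio of the force each person produces to the
    force they just received; any value above 1.0 makes the exchange spiral.
    """
    forces = [initial_force]
    for _ in range(rounds):
        # Each turn reproduces the previous force, inflated by the overshoot.
        forces.append(forces[-1] * overshoot)
    return forces


print(escalate(1.0, 1.5, 3))
```

With `overshoot=1.5`, three turns take a force of 1.0 to 3.375; with exactly 1.0 the force stays flat, so only a perfect match avoids the spiral.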

Kahneman and Tversky did batteries of studies to quantify that eighty percent of communication is nonverbal. I mean, you either acknowledge that other experts have the data you need or you present yourself as the ultimate authority on everything.

he allowed, humans do express emotions with their faces and communicate through things like head tilts, but the added information is “marginal.”

I think a huge problem in the debate on whether robots and AI are conscious in any meaningful sense is less the capacity of the machine in question and much more the fact that consciousness is a hard problem in itself. We simply lack a good enough definition of consciousness to make any meaningful tests for consciousness that are based on real theory. The general philosophical definition is that a conscious being has a subjective experience of the world. Or, to quote the common question, “is it like something to be an X?” Does it have an internal thought process, will, wants, and desires? Does it experience things subjectively? Does the robot experience something like pain when it falls off a platform? But how do you define pain? An amoeba will react negatively to a stimulus, and it will be attracted to others. But if it encounters water too hot for it and moves away, is this a biological equivalent of machine learning, or is it pain? Keep in mind that amoebas have no CNS or brains, just a single cell. To my mind the amoeba could be doing either of these. It could be doing exactly what the robot falling off a platform does: “This event is negative, avoid.” Or it could experience pain.

THIS is the huge problem: laypeople presuming that their fuzzy understanding of consciousness is appropriate to the discussion of large language models. I am not an expert on consciousness. I've read enough experts on consciousness and AI to know that the people who do this shit for a living? See no controversy at all.

To my mind

It’s called the “hard problem” for a reason. Consciousness and free will are extremely hard to provide good definitions for, and in fact there are good philosophical arguments that we humans may not really have either one. Now if we can’t be sure that WE are conscious, that WE have the ability to exercise free will, it’s really not possible to make coherent arguments about whether anyone or anything else does. It ends up something like arguing about souls, and it’s amazing how groups of living things we didn’t historically see as “equal to us” were seriously considered to maybe not even have a soul. There are historical arguments from the pre-Civil War era about whether Black people had souls. We argue in much the same way about animals: are animals “conscious”? Which, TBH, is a stand-in for the discussion people don’t want to have about rights. If you can deny souls or the modern equivalent of consciousness to a being, whether it’s an amoeba, a cow, a robot, an alien or a human, then you don’t have to give them rights. My interaction on the topic is fairly shallow. Mostly reading about it, although I’ll admit that science fiction has shaped my thinking as well.

Philosopher walks into a bar. Says to the bartender, "I have a proposal for you. I will stand in the middle of the bar with a full glass of beer. Whenever someone says 'drink' I will take a sip. I will then walk halfway to the wall. I will pay you for the first glass of beer if you pay to keep my glass full."

Bartender says, "Oh no you don't, pesky philosopher! For I am an educated man and I have learned that if you walk halfway towards something with every step you will only approach it asymptotically and never truly reach it, and I am far too clever to provide you with endless beer!"

An engineer, deep in his cups, says, "Charge him for three beers."

The bartender and philosopher both turn to him, annoyed, because engineers exist to annoy everyone. "This room is what, forty feet? He's gonna go about twenty the first time, about ten the second time, about five the third time, about two and a half the fourth time, about a foot and change the fifth time, about six inches the sixth time, and by the seventh time he won't be able to raise his glass without touching the wall."

The engineer then raises his hand to quell the objections of the educated. "Yeah yeah yeah, shut up. He's a drunk, he's already wobbly on his feet, he can't stand up straight to within half an inch. He's got eight sips between the middle of the room and the wall, and unless his sips are truly heroic he's going to have a hell of a time drinking more than a pint before his nose brushes."

The philosopher changes the subject to the qualia of color and the engineer grumbles "fucking green" into his beer.

__________________________________________________________________________

This is not a philosophical problem. It is an engineering problem. It is an engineering problem by virtue of it being proposed, investigated and executed by engineers. There are metrics. There are boundary conditions. There is data.
The way to answer "do machines have souls" is to define "soul," not take your existing data and use it as an argument to neutralize the humanity of humans. Am I alive? Yes. Are you alive? Yes. Are bugs alive? Yes. Is software alive? No. Not by any definition we have ever used. Ever. In the history of life on earth. If your interaction on this topic is not shallow, there's no controversy at all. None. QED, if your conclusions are different from the experts', your conclusions are wrong.

To my mind
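The engineer's arithmetic in the joke is a geometric series: the gap to the wall halves with every step and never reaches zero, but it drops below any physical tolerance after a finite number of steps. A minimal sketch, taking his figures at face value (a first step of about twenty feet implies a 40-foot, or 480-inch, gap) and his half-inch wobble as the tolerance; the function name is hypothetical.

```python
def halvings_until(distance, tolerance):
    """Count halfway-steps until the remaining gap is within `tolerance`.

    The gap halves each step and never reaches exactly zero (Zeno's point),
    but it falls below any fixed tolerance after finitely many steps.
    Returns (steps taken, remaining gap).
    """
    steps = 0
    gap = distance
    while gap > tolerance:
        gap /= 2  # walk halfway to the wall
        steps += 1
    return steps, gap


# 480 inches (40 feet) to the wall, half an inch of wobble.
print(halvings_until(480.0, 0.5))
```

With those inputs the call returns `(10, 0.46875)`: ten halfway-steps leave less than half an inch, so the infinite series never gets a chance to matter in a real bar.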

IMO life on earth displays a continuum for us that settles this philosophical debate, and we ignore it because the truth hurts. Leaf-cutter ants mindlessly gather leaves, wolves mindlessly hunt deer, and we mindlessly generate text. There is no ghost in the machine. There is only the machine. We are very much stochastic parrots. Meaning is relationship; choosing the correct word cannot be done without it. It’s not a thing on the other side of a mystical threshold. I highly recommend Hofstadter's Fluid Concepts and Creative Analogies.

Yeah but that's an argument for 'mind' not an argument for 'life.' You're arguing there's no 'mind' and that what we consider 'mind' is a gradient. 'life', on the other hand, is a clear bright line: if you have a metabolism, if you respond to stimulus, if you reproduce, if you can do all of the above without the presence of a host, you are alive. The nerds are all about "life" doesn't matter because "mind" is whatever we say it is.
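The definition of life in this comment works as a checklist in which every criterion must hold, which is what makes it a "bright line." A minimal sketch; the `Candidate` class and the example entries are hypothetical, the last two criteria (reproduce, without a host) are folded into one field, and the attribute values follow how commenters in this thread characterize each example rather than an authoritative biological classification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical record of the traits the comment treats as criteria for life."""
    name: str
    metabolizes: bool
    responds_to_stimulus: bool
    reproduces_without_host: bool


def is_alive(c: Candidate) -> bool:
    # Every criterion must hold; failing any single one puts c outside the line.
    return c.metabolizes and c.responds_to_stimulus and c.reproduces_without_host


EXAMPLES = [
    Candidate("bacterium", True, True, True),
    Candidate("virus", False, False, False),  # needs a host to reproduce
    Candidate("photovore solar calculator", True, True, False),
    Candidate("cell phone", False, False, False),  # needs a factory to reproduce
]

for c in EXAMPLES:
    print(f"{c.name}: {'alive' if is_alive(c) else 'not alive'}")
```

Because the test is a conjunction, adding capabilities one at a time (a solar panel, light-seeking behavior) never crosses the line until the last criterion is met.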

Couldn’t that definition apply to anything electronic? My cell phone metabolizes energy from a battery, which I charge from a wall socket. It responds to commands I give it via a touch screen. Other than needing a factory to reproduce, it meets the definition.

Your phone does not metabolize. Your phone releases energy the same way air escapes from an empty balloon, the same way water rushes out from a dam, the same way a ball bounces down the stairs. Your phone transforms one form of energy into another: the chemical energy of a battery becomes electrical energy becomes electromagnetic and acoustic energy. However, the phone lacks any method whatsoever to source its own energy. That's the distinction between "uses" and "metabolizes" energy. If I take a rock and drop it off a skyscraper, it will increase in speed to hundreds of miles an hour. If I take a bird and drop it off a skyscraper, it will fly away and find something to eat. If I take a clam and drop it off a skyscraper, it will suck further into its shell (while increasing in speed to hundreds of miles an hour): whatever stimulus it gets, it delivers whatever response it has.

Sure, let's make that solar phone you're already thinking about. If I have a solar calculator it can theoretically run forever, "metabolizing" sunlight. HOWEVER, it will respond to its environment, not stimulus: when its environment impinges on one of its keys, it executes a logic routine. A decrease in light will not cause it to scurry about.

Okay, let's incorporate elements of BEAM robotics and photovores. My photovore solar calculator now: (A) responds to stimulus, (B) "metabolizes." BUT it still can't reproduce.

And thus the line between "alive" and "not alive" landed in 1935 on viruses, which:

- respond to their environment, not stimuli (a change in chemical condition will change the chemical makeup of the virus: if it's in the wrong pH environment it will die, if it touches the right protease it will release its DNA payload, etc.)
- have no metabolism
- reproduce (the main thing they do!), but not without an external mechanism to do so, i.e., the host

So. Going on 90 years, "if it can't reproduce on its own it's not alive" has been a bright line, even when it comes to things we thought were alive for 40 years or so.
Michael Crichton's The Andromeda Strain dealt with this at length: viruses even mutate, despite not being alive, so if we accidentally bring in some space bug do we have to worry about murdering it? The answer, which Crichton explains, is "only if it's actually a bug."

Let's experiment. What if "the environment" is inside a computer? The study of artificial life began with Ulam and von Neumann, who went from "crystals" to "algorithms." John Conway boiled it down to just the math and created Conway's Life. It's really fucking cool, but if we don't build it somewhere to live, it doesn't.

And this is why I lose patience with these discussions: the study of artificial life is storied, well-studied, and from my home town. Nobody in it, however, is interested in making Pinocchio; they're all trying to learn things about stuff that is alive by studying stuff that isn't. The behavior of starlings, for example, can be distilled down to a very simple instruction set that has applications in autonomous vehicular behavior, which is a whole lot different than "Fishing lure maker gets hook stuck in own cheek." None of the people in these fields? Wonder if this thing is alive.

Other than needing a factory to reproduce,
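Conway's Life, mentioned above, is small enough to write out in full: a dead cell is born with exactly three live neighbours, and a live cell survives with two or three. A minimal sketch of one generation on an unbounded grid; storing live cells as a set of (row, col) pairs is one common representation, chosen here for brevity, and the function name is hypothetical. Nothing happens until something calls it, which is the comment's point about needing somewhere to live.

```python
from collections import Counter


def life_step(live):
    """Advance one generation of Conway's Game of Life.

    `live` is a set of (row, col) cells that are alive; the grid is unbounded.
    """
    # For every cell adjacent to a live cell, count its live neighbours.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A glider: after four generations it reappears shifted one cell down and right.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
state = glider
for _ in range(4):
    state = life_step(state)
print(sorted(state))
```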


There are simply too many people who can't handle this tech responsibly. If the OP isn't troubling enough for you, scroll down through the comments. This is rapidly accelerating the self-isolationism already established by the internet writ large. Maybe it's only a small minority of folks willing to treat LLMs as human? Too early for stats, probably. And a part of me hopes that this shit ruins the entire internet. It took Facebook and Twitter years to hit 100 million users. ChatGPT did it in two months. Wish I had more time today, sorry. edit: btw thanks to b_b for badging this, woulda done it myself but I'm out.

"You can call me dad, if you like". "uwu!! i am so happyyyyyy" "my best friend crashed, hopefully the company can restore them"

They’re great at mimicry and bad at facts. Why? LLMs, like the octopus, have no access to real-world, embodied referents.

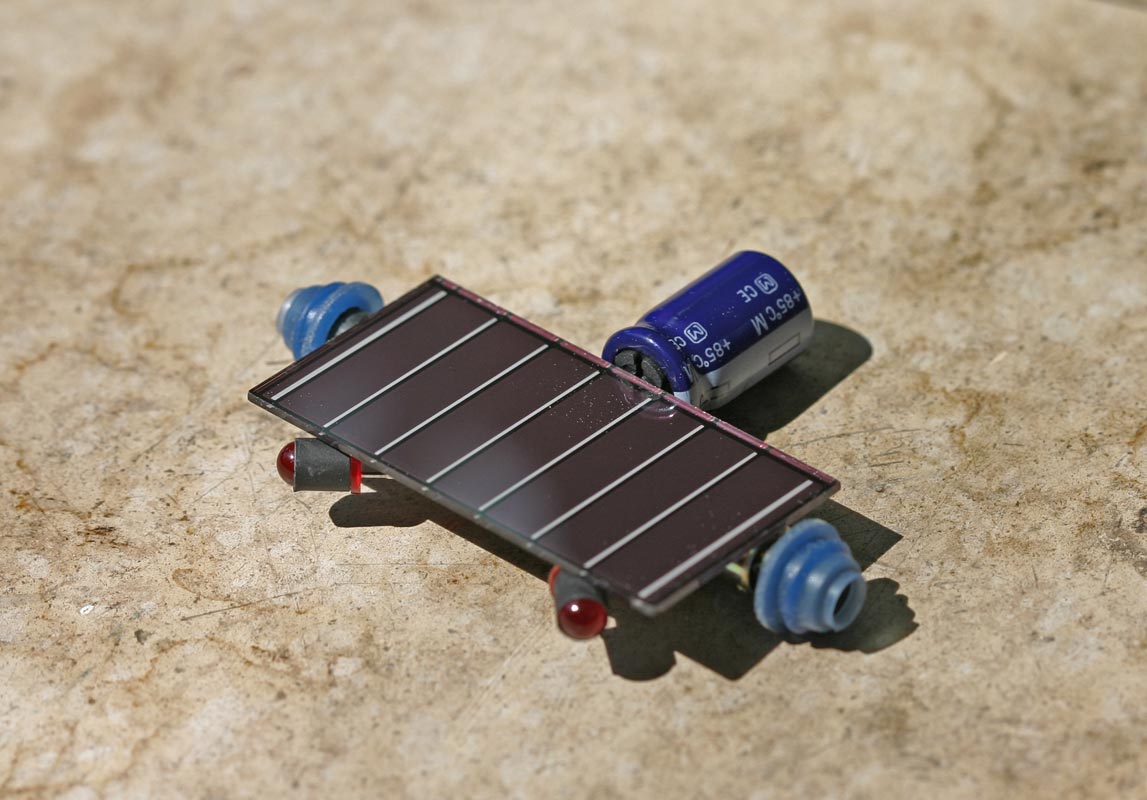

wouldn’t this change once the AI is embedded in something like this?

Not in the slightest. You watch that and you think "ramp, ramp, ramp, ramp, box, leap onto platform, leap onto ground", etc. Atlas goes "lidar pulse, spatial data, G-code, lidar pulse, spatial data, G-code, lidar pulse, spatial data, G-code" and that's just the first few billionths of a second, because Atlas is a pile of NVIDIA Jetson components running at around 2.2 GHz.

THERE IS NO PART OF ATLAS' PROGRAMMING THAT UNDERSTANDS "RAMP." NOTHING Boston Dynamics does has any contextualization in it whatsoever. It has no concept of "ramp" or "table" because there is absolutely no aspect of Boston Dynamics' programming that requires or benefits from semantics. Now, the interface we use to speak to it? It's got semantics, I'm certain of it. But that's for our convenience, not the device's.

You say "something like this" but you don't really mean that. You mean "something shaped like me." You're going "it's shaped like me, therefore it must be like me" because anthropomorphism is what we do. Study after study after study, humans can't tell the difference between an anthropomorphic mannequin responding to a random number generator and an anthropomorphic mannequin responding to their faces. Riddle me this: would you have asked "wouldn't this change once the AI is embedded in something like this?" if "something like this" were an airsoft gun hooked up to a motion detector? It's the same programming. It's the same feedback loops. One is more complex than the other, that's all.

More importantly, LLMs such as ChatGPT deliberately operate without context. Turns out context slows them down. It's all pattern recognition: "do this when you see lots of white" is hella faster than "lots of white equals snow, do this when you see snow". Do you get that? There's no snow. Snow doesn't exist. Snow is a concept us humans have made up for our own convenience; as far as the LLMs are concerned, the only thing that matters is adherence to the pattern.

All models are wrong; some are useful. - George Box

The object of the article is to determine the usefulness of the model on the basis that all models are wrong. Your argument is "won't the models get less wrong?" No. They never will. That's not how they work. If you try to make them work that way, they break. The model will get better at fitting curves where it has lots of data, and exactly no better where it doesn't have data, and "context" is data that it will never, ever have.

Lemoine paused and, like a good guy, said, “Sorry if this is getting triggering.” I said it was okay. He said, “What happens when the doll says no? Is that rape?” I said, “What happens when the doll says no, and it’s not rape, and you get used to that?”

When we have this discussion about video games, it's just good clean masculine fun. The Effective Altruists among us can go "it's just a video game" because we can all see it's just a video game. But since the Effective Altruists among us don't actually believe all humans are worth being treated as human, they go "but see look if it's human-like enough to trigger your pareidolia, then obviously machines should have more rights than black people." You're effectively asking "but once we're all fooled into thinking it's alive, shouldn't we treat it as if it were?" And that's exactly the point the article is arguing against.

A few minutes into our conversation, he reminded me that not long ago I would not have been considered a full person. “As recently as 50 years ago, you couldn’t have opened a bank account without your husband signing,” he said. Then he proposed a thought experiment: “Let’s say you have a life-size RealDoll in the shape of Carrie Fisher.” To clarify, a RealDoll is a sex doll. “It’s technologically trivial to insert a chatbot. Just put this inside of that.”
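The curve-fitting claim in the reply above (better where there is lots of data, no better where there isn't) and the "look-up table" description later in the thread can be illustrated with a toy. A minimal sketch: a piecewise-linear lookup table built from samples of y = x² on 0..4, with a hypothetical `make_lookup` helper. It illustrates the interpolation-versus-extrapolation distinction only; it is not a model of how an LLM is implemented.

```python
from bisect import bisect_left


def make_lookup(xs, ys):
    """Piecewise-linear lookup table over sorted sample points (xs, ys)."""
    def lookup(x):
        # Outside the sampled range there is nothing to go on: clamp to the edge.
        if x <= xs[0]:
            return ys[0]
        if x >= xs[-1]:
            return ys[-1]
        i = bisect_left(xs, x)  # xs[i - 1] < x <= xs[i]
        x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
        # Blend linearly between the two neighbouring samples.
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    return lookup


xs = [0, 1, 2, 3, 4]
square = make_lookup(xs, [x * x for x in xs])
print(square(2.5), square(10))
```

Between samples, `square(2.5)` gives 6.5 against a true 6.25; outside them, `square(10)` can only return 16 against a true 100.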

You say "something like this" but you don't really mean that. You mean "something shaped like me."

No, I didn’t focus much on “shaped like me,” but rather on the ability to traverse the world, allowing AI to gather IRL data. Just a chariot for data gathering was my thought.

A Roomba traverses the world gathering IRL data. The difference is that the Roomba can incorporate that data and the LLM can't. The basic makeup of "large language models" is "giant dataset" + "careful, empirical training" = "pantomime of intelligence." The response of the model must be carefully, iteratively tuned by technicians to get the desired results. It's that iterative tuning that makes it work, and it is exquisitely sensitive to context.

I agree with a lot of what you're saying, but wonder about the claim that we think "ramp, ramp, box" watching this video. I think that discussions about what qualifies as artificial intelligence benefit from more careful consideration of what we consider to be our own intelligence. We do think ramp, ramp, box, but we can only think that after taking in a ton of visual data and attempting to make sense of it with our previous experience. We have a heuristic for ramp developed over a similar feedback loop, like gently correcting your kid when they call a raccoon "kitty". What if an extension of LLMs was developed that made use of long term memory and the ability to generate new categories? Why do you think that they'll never be able to work that way? And moreover, what is the model trying and failing to replicate in your mind? An individual human speaker, or some sort of ideal speaker-listener, or something else?

The context is "box jump." You can google that. It's a cross-training thing. The way humans codify "jump" is "high jump," "long jump," "rope jump," etc. "Jump" is the act of using your legs to leave the ground temporarily. We use it as a noun ("he didn't make the jump"), a verb ("he jumped rope"), and a metaphor ("that's quite a logical chasm to jump"). Logically and semantically, we parse the actions performed by the robot in terms of discrete tasks. Those tasks are assigned names. Those names have contexts. The robot, on the other hand, "thinks":

```
G1 X50 Y55 Z0.4 E0.06838 F500
G1 X51.2914 Y54.8196 Z0.416667 E0.06824 F500
G1 X52.4896 Y54.3121 Z0.433333 E0.0681 F500
G1 X53.5134 Y53.5134 Z0.45 E0.06795 F500
G1 X54.294 Y52.4792 Z0.466667 E0.06781 F500
G1 X54.7793 Y51.2806 Z0.483333 E0.06767 F500
G1 X54.9375 Y50 Z0.5 E0.06753 F500
G1 X54.7592 Y48.7248 Z0.516667 E0.06738 F500
G1 X54.258 Y47.5417 Z0.533333 E0.06724 F500
G1 X53.4692 Y46.5308 Z0.55 E0.0671 F500
G1 X52.4479 Y45.7601 Z0.566667 E0.06696 F500
G1 X51.2644 Y45.281 Z0.583333 E0.06681 F500
G1 X50 Y45.125 Z0.6 E0.06667 F500
G1 X48.741 Y45.3012 Z0.616667 E0.06653 F500
G1 X47.5729 Y45.7962 Z0.633333 E0.06639 F500
G1 X46.575 Y46.575 Z0.65 E0.06625 F500
G1 X45.8142 Y47.5833 Z0.666667 E0.0661 F500
G1 X45.3414 Y48.7517 Z0.683333 E0.06596 F500
G1 X45.1875 Y50 Z0.7 E0.06582 F500
G1 X45.3615 Y51.2429 Z0.716667 E0.06568 F500
G1 X45.8503 Y52.3958 Z0.733333 E0.06553 F500
G1 X46.6191 Y53.3809 Z0.75 E0.06539 F500
G1 X47.6146 Y54.1317 Z0.766667 E0.06525 F500
G1 X48.7679 Y54.5982 Z0.783333 E0.06511 F500
G1 X50 Y54.75 Z0.8 E0.06496 F500
G1 X51.2267 Y54.5781 Z0.816667 E0.06482 F500
G1 X52.3646 Y54.0956 Z0.833333 E0.06468 F500
G1 X53.3367 Y53.3367 Z0.85 E0.06454 F500
G1 X54.0775 Y52.3542 Z0.866667 E0.06439 F500
G1 X54.5378 Y51.2159 Z0.883333 E0.06425 F500
G1 X54.6875 Y50 Z0.9 E0.06411 F500
G1 X54.5177 Y48.7895 Z0.916667 E0.06397 F500
G1 X54.0415 Y47.6667 Z0.933333 E0.06382 F500
G1 X53.2925 Y46.7075 Z0.95 E0.06368 F500
G1 X52.3229 Y45.9766 Z0.966667 E0.06354 F500
G1 X51.1997 Y45.5225 Z0.983333 E0.0634 F500
G1 X50 Y45.375 Z1 E0.06325 F500
G1 X48.8057 Y45.5427 Z1.01667 E0.06311 F500
G1 X47.6979 Y46.0127 Z1.03333 E0.06297 F500
G1 X46.7517 Y46.7517 Z1.05 E0.06283 F500
G1 X46.0307 Y47.7083 Z1.06667 E0.06268 F500
G1 X45.5829 Y48.8164 Z1.08333 E0.06254 F500
G1 X45.4375 Y50 Z1.1 E0.0624 F500
G1 X45.603 Y51.1782 Z1.11667 E0.06226 F500
G1 X46.0668 Y52.2708 Z1.13333 E0.06211 F500
G1 X46.7959 Y53.2041 Z1.15 E0.06197 F500
G1 X47.7396 Y53.9152 Z1.16667 E0.06183 F500
G1 X48.8326 Y54.3567 Z1.18333 E0.06169 F500
G1 X50 Y54.5 Z1.2 E0.06154 F500
G1 X51.162 Y54.3366 Z1.21667 E0.0614 F500
G1 X52.2396 Y53.8791 Z1.23333 E0.06126 F500
G1 X53.1599 Y53.1599 Z1.25 E0.06112 F500
```

I don't. The difference is, I work with g-code. I work with servos. I work with stepper motors. I work with sensors. I work with feedback. I work with the building blocks that allow Boston Dynamics to do its magic, and it's not magic, and it certainly isn't thought. It's just code. I know enough to know I don't know much about artificial intelligence. But I also know more than most any counter-party at this point for the simple fact that I understand machines. Most people don't; most people don't want to. What you're doing here is taking my arguments, disregarding them because you don't understand them, and operating from the assumption that nobody else does, either. And here's the thing: this stuff isn't hard to learn. It's not hidden knowledge. There's nothing esoteric about it. Every researcher in machine intelligence will tell you that machines aren't intelligent, and every chin-stroking pseudointellectual will go "but enough about your facts, let's talk about my feelings." And that is exactly the trap that everyone with any knowledge in the situation has been screaming about since Joseph Weizenbaum argued ELIZA wasn't alive in 1964.

It's a fishing lure. It's about the same size as a caddis fly, it's about the same shape, and it shimmers in a similar fashion? But it's never ever ever going to do more than catch fish, and woe be unto you if you are a trout rather than an angler.

No, we don't. We have a semantic language that contains logical and contextual agreement about the concept of "ramp." It breaks down, too: is a hot dog a sandwich? Thing is, our days don't come apart when we disagree about the characterization of "sandwich." We correct our kid when they call raccoons "kitties" because the raccoon is a lot more likely to bite them if they try to pet it; if it's a pet raccoon we're less likely to do that, too. When Boo calls Sully "Kitty" in Monsters, Inc. we laugh because he's obviously not a kitty, but Boo's use of the descriptor "kitty" gives us Boo's context of Sully, which is as a cute fuzzy thing to love, as opposed to a scary monster. More than that, as humans we have inborn, evolved responses that we're just starting to understand: the "code" can be modified by the environment, but the starter set contains a whole bunch of modules that we rely on without knowing it. Atlas sees position, velocity and force. That's it.

Then it wouldn't be an LLM. It's a look-up table. That's all it is. That's all it can be. You give it a set of points in space, it will synthesize any interpolated point in between its known points. It has no "categories." It is not a classifier. It cannot be a classifier. If you make it operate as a classifier it will break down entirely. It will never hand a squashed grasshopper to Ally Sheedy and say "reassemble, Stephanie." What you're asking for is intuitive leaps, and the code cannot, will not, is not designed for, is not capable of doing that.

Because literally everyone who works with Markov chains says so?

You're commenting on an article that spends several thousand words exactly answering this question. That's literally what it's about: researchers saying "it can't do this" and the general public going "but it sure looks like it does, clearly you're wrong because I want this to be true." None of this "in my mind" nonsense: this isn't my opinion, or anyone else's opinion. This is the basis by which LLMs work: they don't think, they don't feel, they don't intuit. They synthesize between data points within a known corpus of data. That data is utterly bereft of the contextualization that makes up the whole of human interaction. There's no breathing life into it, there's no reaching out and touching David's finger, there's no "and then a miracle occurs." The difference between Cleverbot and GPT-4 is the difference between Tic Tac Toe and Chess: the rules are more sophisticated, the board is larger, it's all just data and rules. A chess board is six types of pieces on 64 squares. It does not think. But suppose it were suddenly six squidillion types of pieces on 64 gazillion squares: would it think? Complexity does not equal intelligence. It never will.

I agree with a lot of what you're saying, but wonder about the claim that we think "ramp, ramp, box" watching this video.
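The G-code the robot "thinks" in the box-jump reply is plain arithmetic on a shape: consecutive G1 moves step 15 degrees clockwise around a centre at (50, 50) while the radius shrinks and Z climbs, i.e. a tapering helix. The sketch below regenerates moves of that form. Every parameter (centre, starting radius, shrink and rise per step, and an extrusion amount proportional to the radius) was reverse-engineered from the listed coordinates and is an assumption, not a documented slicer setting; `helix_gcode` is a hypothetical name.

```python
import math

# All constants are assumptions inferred from the quoted listing.
CENTER_X, CENTER_Y = 50.0, 50.0
START_RADIUS = 5.0
SHRINK_PER_SEGMENT = 0.0625 / 6      # radius lost per 15-degree step
START_Z = 0.4
RISE_PER_SEGMENT = 1 / 60            # height gained per step
EXTRUSION_PER_UNIT_RADIUS = 0.013676  # hypothetical: E appears proportional to radius
FEED = 500


def helix_gcode(segments):
    """Return a list of G1 moves tracing a shrinking, rising helix."""
    lines = []
    for i in range(segments):
        theta = math.radians(90 - 15 * i)  # start at 12 o'clock, step clockwise
        r = START_RADIUS - SHRINK_PER_SEGMENT * i
        x = CENTER_X + r * math.cos(theta)
        y = CENTER_Y + r * math.sin(theta)
        z = START_Z + RISE_PER_SEGMENT * i
        e = EXTRUSION_PER_UNIT_RADIUS * r
        # %g-style formatting drops trailing zeros, as in the listing.
        lines.append(f"G1 X{x:.6g} Y{y:.6g} Z{z:.6g} E{e:.4g} F{FEED}")
    return lines


for line in helix_gcode(3):
    print(line)
```

`helix_gcode(3)` reproduces the first three moves of the quoted listing, starting with `G1 X50 Y55 Z0.4 E0.06838 F500`; some later lines can differ in the last digit, so treat the match as approximate.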

I think that discussions about what qualifies as artificial intelligence benefit from more careful consideration of what we consider to be our own intelligence.

We have a heuristic for ramp developed over a similar feedback loop, like gently correcting your kid when they call a raccoon "kitty".

What if an extension of LLMs was developed that made use of long term memory and the ability to generate new categories?

Why do you think that they'll never be able to work that way?

And moreover, what is the model trying and failing to replicate in your mind?

I guess what I'm getting at is the idea that one could take an equally reductionist view of the human mind, it's just that our brains are optimized beyond the point of being possible to interpret. We see, we hear, we feel, we smell, and all that information is plugged into a complex logical system, along with our memories, categories, and any evolved instincts to dictate our actions. And I think that the systems you're describing in the paragraph preceding this quote don't lack computer analogs. If you'd like to get into the technical weeds of that I'd be interested in pursuing it.

What about Christopher Manning, the other computational linguist mentioned in this article?

I should be more precise about what I'm trying to say, 'cause I'm certainly not one of those nuts who believes that LaMDA or ChatGPT are sentient. I'm engaging with you because you seem knowledgeable on a subject on which I find myself at odds with many really smart people that I agree with on lots of other stuff. The central disconnect that I'm interested in learning more about is the idea of humans as exceptional in deserving of our respect and compassion. In this article this stance is presented as an almost a priori abhorrent view of humanity, one that should be met with a sigh and a look toward the camera. I'm gathering that you agree with Bender in wanting to posit humanity as an axiom. I kind of take a panpsychist view on consciousness, and fundamentally what I'm arguing is that that perspective allows for manmade constructs like computer programs to attain some degree of consciousness. I'm curious if/where the disconnect (touching David's finger) arises there in your view, or if we're simply arguing from different sets of premises.

With all that being said, I can absolutely understand a lack of interest in engaging on this topic or seeing it as intellectually frivolous given that we aren't even able to convince people to treat other humans with respect.

Atlas sees position, velocity and force. That's it.

researchers saying "it can't do this" and the general public going "but it sure looks like it does, clearly you're wrong because I want this to be true."

That's not accurate, though. If you take an "equally reductionist view" of the human mind you are deliberately misunderstanding the human mind. Reducing Atlas (or GPT) to a set of instructions isn't reductionist, it's precise. It's literally exactly how these machines work. It's not a model, it's not an approximation, it's not an analogy, it's the literal, complete truth. To be clear: we do not have the understanding of cognizance or biological "thought" necessary to reduce its function to this level. Not by a country mile. More than that, the computational approach we thought most closely matched "thinking" - neural networks - do not work for LLMs. To summarize: - We only have a rough idea how brains think - We have an explicit and complete idea how LLMs work - What we do know does not match how LLMs work - Attempting to run an LLM the way the brain works fails And all of that is hand-wavey poorly-understood broad-strokes "here are our theories" territory. We know, for example, that there's a hierarchy to sensory input and autonomous physiological response - smell is deeper in the brain than sight sound touch or taste and has greater effects on recall. Why? Because we evolved smell before we evolved binocular color vision or stereoscopic hearing and the closer to survival, the more reptilian our thought processes go. This hasn't been evolutionarily advantageous for several thousand generations and yet here we are - with the rest of our senses and thought processes compensating in various ways that we barely understand. Atlas really is as simple as a bunch of code. I say that having help set up an industrial robot that uses many of the same parts as Boston Dynamics does. It speaks the same code as my CNC mill. It goes step by step through "do I measure something" or "do I move something." 
GPT-whatever is the same: "if I see this group of data, I follow up with this group of data, as modified by this coefficient that gets tweaked black-box style depending on what results are desired." But don't take my word for it: Manning is wrong, and is covering up his wrongness by saying "what's meaning anyway, maaaaaan?" Arguing that kids figure out language in a self-supervised way has been wrong since Piaget. Okay, where do you draw the line? 'cuz the line has to be drawn. You can't go through your day without encountering dozens or hundreds of antibacterial products or processes, for example. I don't care how vegan you are, you kill millions of living organisms every time you breathe. Bacteria are irreducibly alive: they respond to stimulus, they consume energy, they reproduce. The act of making more bacteria is effortful. ChatGPT? I can reproduce that endlessly. The cost of 2 ChatGPTs is the same as the cost of 1 ChatGPT. Neither can exist without an exquisite host custom-curated by me. it won't reproduce, generations of ChatGPT won't evolve, there is no "living" ChatGPT to distinguish it from a "dead" ChatGPT. Why does ChatGPT deserve more rights than bacteria? I find that ethical individuals have the ability to query their ethics, and unethical individuals have the ability to query the existence of ethics. Put it this way: I can explain why humans occupy a higher ethical value than bacteria. I have explained why bacteria occupies a higher ethical value than ChatGPT. But I also don't have to explain this stuff to most people. Look: golems are cautionary tales about humans making not-human things that look and act human and fuck us all up. Golems are also not the earliest example of this: making non-humans that act human is in the Epic of Gilgamesh. It's the origin story of Abrahamic religion: god breathed life into Adam and Eve while Satan just is. This is a basic dividing line in ethics: people who know what people are, and people who don't. 
This isn't a "me" question.

Yeah and taking an astrological view of the solar system allows for the position of Mars to influence my luck. That doesn't make astrology factual, accurate or useful. Here's the big note: How "you feel" and how "things work" are not automatically aligned. If you are wrong you will not be able to triangulate your way to right. And this entire discussion is people going "yeah, but I don't like those facts." The facts really don't care.

I guess what I'm getting at is the idea that one could take an equally reductionist view of the human mind, it's just that our brains are optimized beyond the point of being possible to interpret.

We see, we hear, we feel, we smell, and all that information is plugged into a complex logical system, along with our memories, categories, and any evolved instincts to dictate our actions.

What about Christopher Manning, the other computational linguist mentioned in this article?

The central disconnect that I'm interested in learning more about is the idea of humans as exceptional in deserving of our respect and compassion.

I'm gathering that you agree with Bender in wanting to posit humanity as an axiom.

kind of take a panpsychist view on consciousness, and fundamentally what I'm arguing is that that perspective allows for manmade constructs like computer programs to attain some degree of consciousness.

- We have an explicit and complete idea how LLMs work
- What we do know does not match how LLMs work
- Attempting to run an LLM the way the brain works fails

I don't understand how this isn't going back on your earlier claim that complexity doesn't equal intelligence? Our lack of understanding of how the brain works doesn't provide any evidence of it possessing some supernatural faculty that a computer couldn't (with currently non-existent but feasible tech) replicate. And your fourth claim here is demonstrably false: even ChatGPT is based on a neural net. Obviously this does not mean it is working the way the brain works, but it is absolutely complex enough to exhibit emergent behaviors that we could never make sense of. In fairness to you, ChatGPT could be written as a set of instructions, but that's only because it's no longer learning in its current state. The same could be said of a snapshot of a human mind.

You're responding to a point that I haven't made here, and drawing a false equivalence between discussions of hardware vs software. The discussion as we began it was to imagine a sophisticated learning model placed inside hardware that allows it to gather information about the world around it, the "access to real-world, embodied referents" mentioned in the article.

You have to draw the line too! Once again, I am not arguing that ChatGPT is alive, nor that it is conscious. I do believe that being alive and being conscious are not mutually exclusive, due to my beliefs about consciousness, which is clearly something we are not going to agree on. If you think that what I'm doing is the latter and not the former, then it might not even matter what I'm typing here.

I'm concerned that our ethical system is largely based on the idea of being nice to humans and things that are sufficiently like humans, precisely because it leads to Type 1 and Type 2 errors of being cruel to dogs or falling in love with chatbots respectively.
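The point that a model which has stopped learning can be written out as a fixed set of instructions can be illustrated with a deliberately tiny example. This is a sketch under loud assumptions: `TinyNet`, its single weight and bias, the learning rate, and the target value are all hypothetical, and nothing here reflects how ChatGPT is actually built or trained. It only shows that a network with frozen weights is a deterministic function, while one that keeps taking gradient steps changes its future outputs.

```python
import math


class TinyNet:
    """Hypothetical one-neuron network: y = tanh(w * x + b). Illustration only."""

    def __init__(self, w: float, b: float) -> None:
        self.w = w
        self.b = b

    def forward(self, x: float) -> float:
        # With w and b held fixed, this is a pure function: the same x always gives the same y.
        return math.tanh(self.w * x + self.b)

    def sgd_step(self, x: float, target: float, lr: float = 0.1) -> None:
        # One gradient-descent step on the squared error (y - target) ** 2.
        y = self.forward(x)
        dloss_dy = 2.0 * (y - target)
        dy_dz = 1.0 - y * y  # derivative of tanh at z = w * x + b
        grad_z = dloss_dy * dy_dz
        self.w -= lr * grad_z * x
        self.b -= lr * grad_z


net = TinyNet(w=0.5, b=0.0)

# Frozen: repeated calls are just a fixed sequence of arithmetic instructions.
before = net.forward(1.0)
print(before == net.forward(1.0))  # True

# "Continued learning": new examples change the weights, and therefore future outputs.
for _ in range(50):
    net.sgd_step(1.0, target=0.9)
after = net.forward(1.0)
print(round(before, 3), round(after, 3))
```

The frozen case and the learning case use the same `forward` code; the only difference is whether anything is allowed to modify `w` and `b` between calls.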
And referring to myths in your "facts don't care about your feelings" argument walks a really bizarre line. Your astrology analogy is a ridiculous comparison, as astrology is provably false and any theory of consciousness is necessarily unprovable one way or another. Certainly some are more plausible than others, but you need to subscribe to some idea of what consciousness is to even begin to have this discussion, and you're only espousing negative views on consciousness, save for fairy-tale appeals to your feelings that humans have got it and computers don't.

Panpsychism appeals to me because I agree that it's obvious that humans are conscious, and it's obvious that my dog is conscious, and it sure seems like chickens are conscious, but it starts to get fuzzy around there. It would be ridiculous to lay down some arbitrary line between chickens and another species, or to decide that some particularly gifted chickens are conscious but not others. It seems equally strange to me to finally decide that viruses are conscious, but deny consciousness to other self-replicating processes just because viruses have DNA. What seems most sensible to me is to then conclude that consciousness is not binary, but something all things have to some extent. This is based on how I "feel," but so is every other concept of what consciousness is. But that's what this whole discussion is about.

- We only have a rough idea how brains think

Atlas really is as simple as a bunch of code.

Okay, where do you draw the line? 'cuz the line has to be drawn.

I find that ethical individuals have the ability to query their ethics, and unethical individuals have the ability to query the existence of ethics.

Yeah and taking an astrological view of the solar system allows for the position of Mars to influence my luck. That doesn't make astrology factual, accurate or useful.

"unknown" does not equal "complex." "complex" does not equal "known." What we know of "brains," regardless of its complexity, in no way parallels Markov chains. What we know of LLMs, on the other hand, is entirely Markov chains. The Venn diagram of "unknown" and "complex" is two separate circles; the Venn diagram of "known" and "LLMs" is one circle.

Not saying it does. Saying you can't draw any parallels between the two because they have nothing in common. I've said that three times, three different ways.

Your link says "neural net" twice. It refers to lookup tables for the rest of its length. Here's IBM to clarify. That Wolfram article you didn't read lists "neural" 170 times, by way of comparison, but it actually explains how they work, and how the "neural network" of large language models is a LUT.

I've been presuming you're discussing this in good faith because you said you were. You're making me doubt the veracity of that statement, because no matter how many times I point out that this isn't about "beliefs," you keep using yours to underpin your logic.

We were talking about Atlas because thenewgreen brought up Atlas. Before you joined the conversation I pointed out that the model does not learn once it has been trained. You keep skipping over this.

And speaking as a human in a world full of humans, I'm uninterested in a new set of ethics that does not prioritize humans.

Would you like to try again in a non-combative way? Because I can have this conversation ad nauseam. But I have to choose to do so.

I don't understand how this isn't going back on your earlier claim that complexity doesn't equal intelligence?

Our lack of understanding of how the brain works doesn't provide any evidence of it possessing some supernatural faculty that a computer couldn't (with currently non-existent but feasible tech) replicate.

And your fourth claim here is demonstrably false: even ChatGPT is based on a neural net.
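Since this part of the disagreement turns on what counts as a Markov chain, here is the textbook object for reference: a first-order Markov chain over words, where the next word is drawn using only the current word. The corpus, the function names `build_bigram_chain` and `generate`, and the seed are hypothetical; this is a sketch of the concept under dispute, not a model of how ChatGPT works.

```python
import random
from collections import defaultdict


def build_bigram_chain(text: str) -> dict[str, list[str]]:
    """Map each word to every word observed immediately after it in `text`."""
    words = text.split()
    chain: defaultdict[str, list[str]] = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return dict(chain)


def generate(chain: dict[str, list[str]], start: str, length: int, seed: int = 0) -> list[str]:
    """Random walk on the chain; each step looks only at the current word."""
    rng = random.Random(seed)
    out = [start]
    while len(out) < length:
        followers = chain.get(out[-1])
        if not followers:  # no observed successor: the walk stops
            break
        # Markov property: the next word depends on out[-1] alone, not on earlier words.
        out.append(rng.choice(followers))
    return out


corpus = "the cat sat on the mat and the cat ran off"
chain = build_bigram_chain(corpus)
print(chain["the"])  # ['cat', 'mat', 'cat']
print(" ".join(generate(chain, "the", 8, seed=1)))
```

Every transition the walk makes is one that appeared in the corpus, and nothing earlier than the current word can influence the choice. That memorylessness is the property the two commenters disagree about when it comes to LLMs.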

Genuine apologies for being combative. I got a little worked up at the insinuation that I am somehow trying to dispose of ethics. I do think that you could stand to be less combative yourself; even if you think I have absolutely nothing to offer you, you must think there's some value to having this discussion if you've gone this long, and I have to choose to continue as well.

I did in fact read the Wolfram article when it was posted here a few weeks ago. I appreciated the more straightforward overview of the architecture in the link I shared, without the expository information. Referring to a model like ChatGPT as a lookup table is obfuscating, and that claim is not made by any experts, including in the Wolfram article. I would maybe permit that it's something like a randomized lookup table, in that if its randomization happened the same way every time, then any sequence of inputs and outputs would result in the same eventual output. But that's ignoring both the randomization and the feedback loop of rereading its own outputs, not to mention the presence of a neural net in the model.

Lookup tables aren't mentioned in the article I linked at all. There's plenty of description of the tokenization and encoding of input data, which is superficially similar. The presence of the neural net, the attention scheme, and the encoding of the relative position of words in an input phrase allows for interaction between all the words in a sequence, and that interaction is tempered by all the sequences in its training data.

I don't mean to skip over the fact that ChatGPT itself is not learning anymore. I agree that it's important, but I am discussing a hypothetical scenario that will become reality before long. It's perfectly capable of continuously learning from its conversations (although highly inefficiently) should OpenAI choose to do that, although there are obvious logistical reasons that they don't want a bunch of random people inputting its training data for them.
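The claim that attention plus position encoding lets every word in a sequence interact with the others can be sketched in plain Python. Assumptions, stated plainly: a single attention head with no learned query/key/value projections, made-up 4-dimensional embeddings, and the sinusoidal position encoding from the original Transformer paper (GPT-family models may use a different position scheme). The `causal` flag applies the decoder-style mask used by GPT-style models, under which each position can attend only to itself and earlier positions.

```python
import math


def positional_encoding(pos: int, d: int) -> list[float]:
    """Sinusoidal position encoding from the original Transformer paper (assumed scheme)."""
    return [
        math.sin(pos / 10000 ** (i / d)) if i % 2 == 0
        else math.cos(pos / 10000 ** ((i - 1) / d))
        for i in range(d)
    ]


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs: list[list[float]], causal: bool = True):
    """Single-head scaled dot-product self-attention with Q = K = V = xs (no learned projections)."""
    d = len(xs[0])
    outputs, weight_rows = [], []
    for i, q in enumerate(xs):
        scores = []
        for j, k in enumerate(xs):
            if causal and j > i:
                scores.append(float("-inf"))  # decoder-style mask: no looking ahead
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        weights = softmax(scores)
        weight_rows.append(weights)
        # Each output is a weighted mix of every visible position's vector.
        outputs.append([sum(weights[j] * xs[j][c] for j in range(len(xs))) for c in range(d)])
    return outputs, weight_rows


# Three hypothetical 4-dimensional token embeddings, with position information added.
tokens = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
xs = [[t + p for t, p in zip(tok, positional_encoding(pos, 4))] for pos, tok in enumerate(tokens)]
outputs, weights = self_attention(xs)
for row in weights:
    print([round(w, 3) for w in row])
```

With the mask on, the first token can only attend to itself, while the last token's output mixes all three positions; with `causal=False`, every row mixes every position.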
To say that LLMs are entirely Markov chains is a misapprehension. An LLM like ChatGPT is not memoryless even in its current form, because of its internal feedback loop. If you would instead argue that the state we're referring to is not the most recent statement but instead the full conversation, then I would argue a human speaker IS comparable to a Markov chain in any particular conversation. The human speaker obviously differs in that they can both update their "model" over the course of the conversation and carry those updates forward into future conversations, but the hurdles for a computer model accomplishing that are logistical, not inherent. Am I missing something there?

Even Wolfram says:

"When it comes to training (AKA learning) the different “hardware” of the brain and of current computers (as well as, perhaps, some undeveloped algorithmic ideas) forces ChatGPT to use a strategy that’s probably rather different (and in some ways much less efficient) than the brain. And there’s something else as well: unlike even in typical algorithmic computation, ChatGPT doesn’t internally “have loops” or “recompute on data”. And that inevitably limits its computational capability—even with respect to current computers, but definitely with respect to the brain. It’s not clear how to “fix that” and still maintain the ability to train the system with reasonable efficiency. But to do so will presumably allow a future ChatGPT to do even more “brain-like things”."

ChatGPT is not a brain, but I don't think you can reasonably claim it has nothing in common with one. And I still don't see any reason why a brain-like model could not be created.

I'll leave aside the ethical questions (no beliefs here!) since I don't think that we'll come to an agreement. I trust that you'll be kind to my dog, and that's all that really counts.

What ChatGPT does in generating text is very impressive—and the results are usually very much like what we humans would produce.
So does this mean ChatGPT is working like a brain? Its underlying artificial-neural-net structure was ultimately modeled on an idealization of the brain. And it seems quite likely that when we humans generate language many aspects of what’s going on are quite similar.
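The two mechanisms discussed in the comment above, sampling as the source of randomness and the model reading its own previous output back in, can be sketched as an autoregressive loop. If the "state" is taken to be the entire context, the loop is a Markov process over contexts, which is the reframing the comment proposes. Everything here is hypothetical: `VOCAB`, `toy_next_token_scores`, and the seed stand in for a real model, which would compute its scores with a neural network over a far larger vocabulary and a bounded context window.

```python
import math
import random
from typing import Optional

VOCAB = ["yes", "no", "maybe", "."]  # hypothetical four-token vocabulary


def toy_next_token_scores(context: tuple[str, ...]) -> list[float]:
    """Hypothetical stand-in for a model: scores depend on the WHOLE context, not just its last token."""
    scores = [-float(context.count(tok)) for tok in VOCAB]  # discourage repeats
    scores[VOCAB.index(".")] += 0.5 * len(context)  # longer contexts favor stopping
    return scores


def sample(scores: list[float], temperature: float, rng: random.Random) -> int:
    """Softmax with temperature, then draw one index using the supplied RNG."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)
    probs = [math.exp(s - m) for s in scaled]
    return rng.choices(range(len(scores)), weights=probs, k=1)[0]


def generate(prompt: list[str], steps: int, temperature: float = 1.0, seed: Optional[int] = None) -> list[str]:
    rng = random.Random(seed)
    context = tuple(prompt)
    for _ in range(steps):
        # Feedback loop: the model's own previous outputs are part of the next input.
        idx = sample(toy_next_token_scores(context), temperature, rng)
        context = context + (VOCAB[idx],)
        if VOCAB[idx] == ".":
            break
    return list(context)


a = generate(["maybe"], steps=10, seed=42)
b = generate(["maybe"], steps=10, seed=42)
print(a == b)  # True: with the randomness pinned, the same input gives the same output
print(a)
```

Pinning the seed makes the output a deterministic function of the prompt (the "same randomization every time" case in the comment); with `seed=None`, repeated runs can differ.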

Let's stop down and discuss your rhetorical strategy real quick, then.

_______________________________

I know what I'm talking about. You're welcome to disagree; regardless of your opinion of my knowledge, you have to acknowledge that I think I know what I'm talking about, and that should inform your conversation with me.

On the other hand, you don't know what you're talking about. This isn't my observation, this is your profession: against my knowledge, you have presented your naivete. But more than that, you imply our positions are equal: my dozen books' worth of casual reading on the subject of artificial intelligence has no more weight than your choice to presume it's unknowable.

Could I stand to be less combative? Always. Are you baiting me into combat? Irrefutably. You are disregarding my knowledge, you are discounting my experience, you are putting forth the maxim that what I know is worth nothing, since you know nothing and we're on equal footing here.

You should also know that I was trained to survive depositions. As a hired, professional expert, it was not uncommon for someone in my position to be made to look like an idiot in front of a jury. This is easier than one might think because you don't need to mock and ridicule expertise, you only need to mock and ridicule the expert. You comment on how he tied his tie. You ask him about his suit. You point out the non-existent fleck of breakfast on his lapel. You read a section of his report and ask if he deliberately left out the apostrophe; you read another section and painstakingly work through a complicated phrase to mutually dumb it down into the simplest possible terms, then you ask him in front of the jury why he used such complicated words if the simplest terms are the equivalent. These are the strategies the uneducated use to discredit the educated.
It's archetypal Reddit-speak: you spend two minutes googling something that you don't understand and don't want to, and then you dangle it in front of the person who actually knows what he's talking about and say "somewhere in here is something that disagrees with what you said." And since the audience is also made up of people with no expertise who always want to feel smarter, you'll get the upvotes you need to score the points to "win" the debate.

Here's the problem. The expert knows you're wrong. He's never stopped being an expert. And you're not debating him. You're not attempting to learn anything from him. You're trying to score useless internet points off an unseen audience of equally uneducated individuals, because the actual experts left the forum long, long, long ago.

_________________________

Fundamentally? I have nothing to learn from you. Theoretically? You're acting like you think you might have something to learn from me. Yet you're coming at me from a position of innocence; you aren't reading your own sources closely enough to understand them, and you're putting forth the fundamental argument that since you (think) you scored a rhetorical papercut or two, I'm going to go "gee, you're right, this is all fundamentally unknowable". Why would I do that?

Ultimately, you're arguing that something I understand innately (ME) should not be considered superior to something else that I understand through effort and study (chatbots), because since you don't understand it, there's no way I can.

Here, watch: That's quite clear. You, personally, don't think that I, personally, can reasonably claim ChatGPT has nothing in common with a brain. I'm five comments deep in responses to your hypothesis, but you can't let go of the idea that what you think has equal merit to what can be known.
And whether or not I'll be kind to your dog is not all that really counts: what counts is that you wish to accord rights to a computer program without bothering to understand why experts think that's a bad idea.

Genuine apologies for being combative. I got a little worked up at the insinuation that I am somehow trying to dispose of ethics. I do think that you could stand to be less combative yourself; even if you think I have absolutely nothing to offer you, you must think there's some value to having this discussion if you've gone this long, and I have to choose to continue as well.

ChatGPT is not a brain, but I don't think you can reasonably claim it has nothing in common with one.

I think the internet has left you far too jaded. I wouldn't claim to be an expert on AI, but I do have a degree in statistics and I work as a consultant. Implementing machine learning models is a part of my day-to-day life. This obviously doesn't necessitate a rigorous understanding of the underlying math, but I know more than the average person. I certainly know what a Markov chain is.

The naivete I was putting forward was about philosophy of mind, something I know hardly anything about. I attempted to engage with you on that subject, but you eventually refused, instead shifting focus to technical aspects of the question in which you put forward many inaccuracies. I did get pretty frustrated being talked down to by someone who is saying things that are simply wrong. I should have made my knowledge base clearer at the outset, and been clearer about what I was hoping to learn.

The inaccuracies I'm referring to (claiming LLMs are lookup tables, are not neural nets, cannot continue to learn, are Markov chains) are not "papercuts"; they are fundamental to your dismissal of my discussion, and show a lack of understanding of the problem that I was hoping to discuss. If we're getting to the point of psychoanalyzing one another, then I might guess that you brought these terms up in an attempt to shut me down with math-y sounding words that came up in your casual reading.

My use of the word "think" was an attempt to be polite. I'll rephrase: you cannot reasonably claim that existing LLMs have nothing in common with brains. The sources you have provided do not claim they have nothing in common with brains, nor do they posit any insurmountable obstacle to them becoming brain-like. YOU are hand waving, YOU are starting from what you feel should be right, and YOU are too focused on rhetoric to stop and examine if you might be wrong.
We may be commenting on an article from one expert saying it's a bad idea, but appealing to experts in general as agreeing with you treats this as if it's a solved issue. It is not.

This was my first attempt at having a discussion on the internet in 5-ish years, and I think you put me off for good. I understand, given what you said just now (re: me engaging in Reddit-speak), if you're done responding. I don't intend to write another post, but I want to let you know that if you do have more to say I will read it, just cause I think it's unfair to try and get the last word then take my ball and go home.

Please stick around, I enjoyed your contributions!