The human race has had a limited view of the sky, and the cosmos beyond. At first we had our own eyesight, then lenses, then telescopes, then non-visual spectrum sensors, and now a few satellite platforms for the same technologies.

As habitation of the Moon and Mars moves forward, what does this mean for our cosmological viewing capabilities?

There is no atmosphere to obscure our telescopic vision from the Moon.

Mars' atmosphere is far less dense than Earth's, so that's also a less-occluded view of the sky from Mars.

So, considering that some of our most interesting observations have been made by ganging up several terrestrial telescopes and using them in unison, what further capabilities might we gain from having permanent viewing platforms on Earth, the Moon, and Mars?

Are the orbital mechanics too difficult? While it is possible to calculate the correct viewing time/location on both Earth and Mars, would the window of opportunity simply be too small to view a single point in the sky from both vantage points simultaneously?

Do we leverage more asynchronous viewing, like they did recently with the black hole photos? (Have all three viewing platforms take images of Location X, then composite the images together later?)

It seems like this "tripod for cosmic imaging" idea would allow us "better" views of the galaxy... but I'm not smart enough to work out what "better" means, or what additional capabilities might be opened up when we have viewing platforms on multiple planetary bodies.

Anyone wanna try and think this through with me?

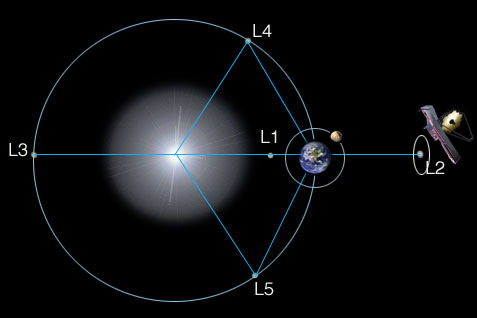

> ...One of the reasons that they are going to put the James Webb in orbit at the L2 lunar node is that the earth and moon block the sun creating permanent shadow...

WAT. That is SO cool... I didn't know there was anywhere you could "hide" from the Sun permanently in our solar system. That's a revelation... (... and obviously, my storyteller brain is now crafting a scifi story that revolves around something painted Vanta Black hiding out in this "dark" spot...)

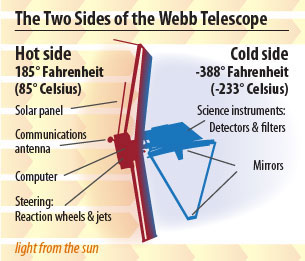

Had to look it up because I never realized it either. So the telescope would orbit the L2 node outside the shadow (solar panels need light) but all the bright things in the sky will stay right where they belong behind the sunshield. https://jwst.nasa.gov/orbit.html

The telescope itself will be operating at about 225 degrees below zero Celsius (minus 370 Fahrenheit). The temperature difference between the hot and cold sides of the telescope is huge - you could almost boil water on the hot side, and freeze nitrogen on the cold side! To have the sunshield be effective protection (it gives the telescope the equivalent of SPF one million sunscreen) against the light and heat of the Sun/Earth/Moon, these bodies all have to be located in the same direction. This is why the telescope will be out at the second Lagrange point.

Heat problems in space are so weird when you're used to thinking about an atmosphere.

Webb primarily observes infrared light, which can sometimes be felt as heat. Because the telescope will be observing the very faint infrared signals of very distant objects, it needs to be shielded from any bright, hot sources. This also includes the satellite itself! The sunshield serves to separate the sensitive mirrors and instruments from not only the Sun and Earth/Moon, but also the spacecraft bus.

> Heat problems in space are so weird when you're used to thinking about an atmosphere.

Convection, conduction, radiation, man. Convection goes to zero because you have no fluid to interact with. Conduction stays exactly where it is. Radiation hits the roof because there is absolutely no dissipation between "hot thing" and "you." What's funny is the equations are actually hella simpler because "convection" is an empirical pain in the ass to calculate. Parallel plate sinks get used most often not only because they're easy to extrude but because they have some of the least heinous math.
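For anyone who wants numbers on the radiation-only case, here is a minimal sketch. With no convection, a sunlit surface settles where absorbed sunlight equals emitted infrared (the Stefan-Boltzmann law). The solar flux of roughly 1361 W/m² near Earth, the ideal absorptivity and emissivity of 1.0, and the function name are assumptions for illustration, not anything from JWST's actual thermal design.

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W / (m^2 K^4)
SOLAR_FLUX = 1361.0      # assumed sunlight intensity near Earth, W / m^2


def plate_equilibrium_temp_k(flux=SOLAR_FLUX, absorptivity=1.0,
                             emissivity=1.0, radiating_faces=1):
    """Temperature at which a flat plate radiates away exactly what it absorbs.

    Hypothetical helper: the plate faces the Sun squarely, loses heat only
    by radiation, and emits from `radiating_faces` sides (1 or 2).
    """
    absorbed = absorptivity * flux                     # W per m^2 of sunlit face
    emitted_per_k4 = emissivity * SIGMA * radiating_faces
    return (absorbed / emitted_per_k4) ** 0.25


# Back side insulated: only the sunlit face can radiate.
one_sided = plate_equilibrium_temp_k()
# Bare plate: both faces radiate, so it runs cooler.
two_sided = plate_equilibrium_temp_k(radiating_faces=2)

print(f"one radiating face:  {one_sided:.0f} K")
print(f"two radiating faces: {two_sided:.0f} K")
```

Both idealised cases land within a few tens of degrees of water's boiling point, which fits the "almost boil water on the hot side" description. Real surfaces have absorptivity and emissivity well away from 1.0, so treat this as a ballpark only.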

francopoli to back me up 'cuz that d00d has forgotten more about telescopes than I'll ever know and am_Unition because he does space observations for a living and stuff but adding scopes up optically doesn't gain you that much. I mean it sort of does but at that point you're basically getting non-visual data in the optical wavelength, it's not like you get a better photograph out of it.

Deformable mirrors and laser guide stars have basically allowed an entire generation of telescopes to stay relevant. You can virtually eliminate atmospheric perturbation with one. I went deep down the rabbit hole on this one because I figured if I was able to cobble my own MEMS array I could spy on spy satellites and shit which would be SUPER COOOOL but then I got to talkin' with the guys at Palomar and when you're talking "guide star" and "atmosphere" you're no longer talking "eBay lasers" you're talking "notify the FAA when you turn it on lasers" and that was about the time I realized that optics is kind of a settled science and that the engineers are applying materials science and state-of-the-art processing and manufacturing techniques faster than you really hear about as an amateur.

I mean obviously big damn mirror in outer space is always going to win. People have been suggesting telescopes on the far side of the moon for 60 years or more. When you say "things are going to be super-great if we can ever put a 20-foot mirror out there at like, Lagrange 2 or some shit so that the thing is completely isolated" the astronomers will say "it already has a Twitter account." And when you say "asynchronous viewing" they say "you mean like when we look at something in December and then again in June so that our light-gathering is 2AU wide?"

The black hole "photo" wasn't a triumph of imaging, it was a triumph of DSP. The signal they were working with wasn't anything new, really, it was the gob-smacking amount of post-processing necessary to cull the noise.

From an imaging standpoint, the JWST is gonna be sumpin' else.
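To put rough numbers on what a wide baseline buys, the smallest resolvable angle scales as wavelength divided by baseline (or mirror diameter). The sketch below is only that rule of thumb. The 1.3 mm radio wavelength, the Earth-diameter baseline, and the 6.5 m mirror observing at 2 microns are my own assumed inputs, and the function name is made up for the example.

```python
import math

# Conversion from radians to microarcseconds.
RAD_TO_MICROARCSEC = math.degrees(1.0) * 3600.0 * 1e6


def resolution_microarcsec(wavelength_m, baseline_m):
    """Rule-of-thumb angular resolution, theta ~ wavelength / baseline."""
    return wavelength_m / baseline_m * RAD_TO_MICROARCSEC


EARTH_DIAMETER_M = 1.2742e7  # assumed longest possible ground baseline

# Radio dishes linked across the planet, observing at an assumed 1.3 mm.
linked_radio = resolution_microarcsec(1.3e-3, EARTH_DIAMETER_M)

# A single assumed 6.5 m space mirror observing at 2 microns (infrared).
single_mirror = resolution_microarcsec(2.0e-6, 6.5)

print(f"planet-wide radio array: {linked_radio:10.1f} microarcsec")
print(f"single 6.5 m mirror:     {single_mirror:10.1f} microarcsec")
```

The linked array resolves far finer angles, but it collects sparse radio measurements that have to be reconstructed into a picture afterwards, which lines up with the "triumph of DSP" point.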

So, generally speaking, the parallax of placing your two imaging systems 54 million kilometers apart, and synchronizing their observations, is not gonna reveal anything really exciting, or up-level our cosmic vision by much? Bummer. I thought it might provide 'interesting' benefits. In other news, the JWST is a sexy-looking beast, isn't it? It's nice when we evolve beyond "what else can we stick in a KH-11 box?" design ethos. :-)
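A quick way to compare the two baselines mentioned in the thread: the apparent shift of a target against the background is roughly baseline divided by distance. This is a small-angle sketch only. The target distance of one parsec is a hypothetical round number, and the 54 million km figure is taken straight from the comment above.

```python
import math

AU_M = 1.495978707e11                    # astronomical unit in metres
PARSEC_M = AU_M * 648000.0 / math.pi     # parsec, defined from the AU
RAD_TO_ARCSEC = math.degrees(1.0) * 3600.0


def apparent_shift_arcsec(baseline_m, distance_m):
    """Small-angle shift in apparent position between the two ends of a baseline."""
    return baseline_m / distance_m * RAD_TO_ARCSEC


TARGET_DISTANCE_M = PARSEC_M  # hypothetical target one parsec away

# Same telescope, six months apart: opposite sides of Earth's orbit.
earth_orbit_shift = apparent_shift_arcsec(2.0 * AU_M, TARGET_DISTANCE_M)

# Simultaneous Earth and Mars observations, 54 million km apart.
earth_mars_shift = apparent_shift_arcsec(54e9, TARGET_DISTANCE_M)

print(f"Earth orbit (2 AU):     {earth_orbit_shift:.3f} arcsec")
print(f"Earth-Mars (54e6 km):   {earth_mars_shift:.3f} arcsec")
```

Under these assumptions the December-to-June baseline is several times wider than the Earth-Mars one, so simultaneity is the main thing a second planet adds, not a longer lever arm.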

Honestly, FOCAL sounds like a not-so-great idea, even after considering my not-so-optimistic mood. But perhaps more honestly, wtf is this, where the default masses are the sun and Earth, and I can input a fucking semimajor orbital axis in units of an Angstrom, but astronomical units (AU) isn't in the selection menu? Is it a joke?! It was one of the first google results. omfg, the only thing that'd be more infuriating is if google.com's default (edit: AMERICAN) conversion factor of 1 AU was in terms of miles... @#%*&(^$ YEP, I'M IN STUPIDTOWN. THANKS FOR REMINDING ME WHY I WRITE MY OWN CODE.

ANYWAY, good luck getting to 550 AU in an appreciable time. New Horizons took about a decade to get to about 30 AU, and it was the fastest object ever launched by humans (relative to the Earth), although I think maybe Juno or the Parker Solar Probe has probably since given it at least a run for its money, if not beaten the record. Then, you've gotta make a controlled burn to linger at 550 AU for an appreciable amount of time to collect photons. Solving that issue, you get to orbit the sun incredibly slowly and map out what's behind it over the course of the next several thousand years, which amounts to only taking data within a plane, plus or minus some little bit of thickness. Obviously, there would have to be as many science targets falling within this accessible plane as was possible, and you'd have to optimize the orbit for that, but I digress.

Edit 2: and yeah, good luck with filtering out the solar corona's contribution to your data via recombination and all the other interactions, cuz it's dead center of your field of view / region of interest.

I'mma address some of the other things in this thread tomorrow, because, for one thing, radio waves aren't only 2 or 3 times longer wavelength than optical, but when you get a notification via 'bl00 regarding DSP and you already had this tab open in your browser for what you were up to that day, it's time for another beer.
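The two time scales in that comment are easy to sanity-check. The sketch below takes the "about 30 AU in about a decade" figure at face value as a constant average speed, and treats the 550 AU orbit as circular around the Sun so Kepler's third law applies directly. Both are simplifying assumptions, and the function names are made up for the example.

```python
def years_to_reach(target_au, au_per_year):
    """Cruise time at a constant average speed (no gravity, no braking burn)."""
    return target_au / au_per_year


def orbital_period_years(semi_major_axis_au):
    """Kepler's third law for a small body orbiting the Sun: T^2 = a^3 (years, AU)."""
    return semi_major_axis_au ** 1.5


# Assumed average speed: about 30 AU per decade, per the comment above.
cruise_years = years_to_reach(550.0, 30.0 / 10.0)

# Assumed circular orbit at 550 AU.
period_years = orbital_period_years(550.0)

print(f"cruise to 550 AU: about {cruise_years:.0f} years")
print(f"one orbit at 550 AU: about {period_years:.0f} years")
```

So under these assumptions the trip alone runs to nearly two centuries, and a single lap around the Sun at that distance takes on the order of ten thousand years.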

> astronomical units (AU) isn't in the selection menu?

It's there, though. In the 'scientific' section, they just didn't capitalise it and it's 'au'. Absolutely true, but if goobster could brush me off on pointing out that teleporting ~10^29 particles composing the human body would be beyond stupidly difficult and doesn't-work-like-star-trek (source), I assumed that anything optimistically achievable within 100 or so years is fair game. :P

anything practical you mentioned

> ANYWAY, good luck getting to 550 AU in an appreciable time.

If you're in a position of caring what the surface of exoplanets looks like you'd best be in a position to get out to 550 AU in an appreciable time. No, I do not have a solution in mind. Just wanted to point out that I read "550 AU" yesterday, dug 1 level deeper than Wikipedia and determined that the scientific investment and rigor behind FOCAL makes Project Orion look NASA-grade.